In this post, we will install Loki, a log aggregation system inspired by Prometheus. Loki is just an example app, used to show how we can apply Kustomize and Helm together ❤️. I think learning Helm & Kustomize is good practice for the Certified Kubernetes Application Developer exam. You will definitely be working with a lot of YAML, so knowing how to process it always helps.

Loki’s installation instructions using Helm are available here. You can choose from several Helm charts:

- loki/loki-stack – deploys the whole observability stack: Prometheus, Grafana, Promtail, fluent-bit & Loki.

- loki/loki – Helm chart for just Loki, the log aggregator.

- loki/promtail – chart for Promtail, a log shipping agent.

- loki/fluent-bit – deploys fluent-bit, a different log shipping agent.

In this tutorial, we will be using the loki/loki and loki/promtail charts.

Helm

Let’s begin by adding the Loki chart repository to Helm.

[web_code]
helm repo add loki https://grafana.github.io/loki/charts
helm repo update
[/web_code]
It’s important to note that we won’t be using helm install or helm upgrade --install, which would install the chart directly into the cluster. Instead, we will use Helm 3’s template functionality.

I highly recommend this approach, because it gives you a chance to look at the manifests of your new software before applying them to the cluster. This helps you get familiar with the new software, its components and its configuration. You can also check whether the chart’s configuration is acceptable for your environment. Good things to check include:

- Role Bindings / Cluster Role Bindings – make sure the chart doesn’t get god mode in your cluster.

- User – you don’t want your new software to run as root, do you?

- Pod Security Policies – if the software needs special permissions, like host networking or access to host volumes, I would expect the package to include restrictive Pod Security Policies.

- Pod Disruption Budgets & affinities/anti-affinities – these features add an extra layer of reliability to the new software.

- Network Policies – I haven’t seen anyone do this, but it would be great if the new software mapped out its connection patterns with Network Policies.

If your chart doesn’t tick all the boxes, don’t worry: I will show how you can fill the gaps yourself.

Templating with Helm

One of Helm’s big strengths is templating. It lets you set your own values in a values.yaml file or on the command line with --set "key1=val1,key2=val2,...". You can also change the namespace using the -n flag.
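The same overrides can also live in a values file instead of --set, which is easier to review and keep in git. Here is a minimal sketch. It assumes a hypothetical file name, loki-values.yaml, passed with helm template loki -n monitoring -f loki-values.yaml loki/loki. The keys are the persistence options used later in this post:

```yaml
# loki-values.yaml (hypothetical file name)
# Same effect as --set "persistence.enabled=true,persistence.storageClassName=local-storage"
persistence:
  enabled: true
  storageClassName: local-storage
```

Each dot in a --set key corresponds to one level of nesting in the values file, so both forms render the same manifests.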

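Now let’s begin by using helm template to render both charts into YAML: helm template loki -n monitoring loki/loki > loki.yaml for Loki, and helm template loki -n monitoring loki/promtail > promtail.yaml for Promtail.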

You should see the generated manifests in the loki.yaml and promtail.yaml files. If something doesn’t suit your environment, take a look at the chart’s published values.yaml configuration file to figure out whether the option you need can be changed. If it can’t, Helm won’t be able to help, but that’s still fine: we can use Kustomize to make the changes. In Loki’s case we are lucky, as the chart has a lot of configuration options. Take a look at the loki values.yaml and promtail values.yaml.

One important note: don’t edit the generated files. If you edit them, you will have a hard time updating, because on every new release you will have to manually reapply the exact same changes. If you leave them untouched, you can safely pull in new changes from the command line:

[web_code]
helm repo update
helm template loki -n monitoring loki/loki \
  > loki.yaml
helm template loki -n monitoring loki/promtail \
  > promtail.yaml
[/web_code]
Now let’s take a look at the generated loki.yaml. In Loki’s StatefulSet definition, you can see that by default it uses emptyDir to store the data:

[web_code]
volumes:
  - name: config
    secret:
      secretName: loki
  - name: storage
    emptyDir: {}
[/web_code]
Let’s change it to use Persistent Volumes, because we want to actually keep our logs. We can do that with Helm’s --set argument, as the chart exposes this option in its values.yaml:

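[web_code]
persistence:
  enabled: false
  accessModes:
  - ReadWriteOnce
  size: 10Gi
  annotations: {}
[/web_code]
We need to add --set "persistence.enabled=true,persistence.storageClassName=local-storage", so the full command becomes helm template loki -n monitoring --set "persistence.enabled=true,persistence.storageClassName=local-storage" loki/loki > loki.yaml.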

By the way, I figured out how to set storageClassName by looking at the chart’s statefulset.yaml template, not the published values.yaml file. Chart authors sometimes forget to expose all the options in values.yaml, so you have to check the actual templates. Let’s take a look at the generated YAML. We can see that our persistent volume claim template got generated:

[web_code]
volumeClaimTemplates:
- metadata:
    name: storage
    annotations:
      {}
  spec:
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: "10Gi"
    storageClassName: local-storage
[/web_code]
Cool, looks like our problem is solved. But if you look closely at the generated YAML, you’ll notice one minor thing that doesn’t add up: the generated Pod Security Policy still allows emptyDir:

[web_code]
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: loki
  namespace: monitoring
spec:
  privileged: false
  allowPrivilegeEscalation: false
  volumes:
    - 'configMap'
    - 'emptyDir'
    - 'persistentVolumeClaim'
    - 'secret'
[/web_code]
Yet nothing else mentions emptyDir anymore. Looking at the chart template, there is no option to fix that. It’s time to take out the big guns: let’s use Kustomize.

Kustomize

Kustomize works completely differently from Helm. Instead of templating, it takes base manifests and merges your custom patches into them. The YAML generated by Helm will be our base, and we will patch it with our custom changes.

To start with Kustomize, create a kustomization.yaml file that lists loki.yaml as a resource, making the Helm output our base.
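Here is a minimal kustomization.yaml for this first step, assuming loki.yaml sits in the same directory. Running kustomize build . at this point should output the same resources, possibly reordered and reformatted:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
# The Helm-rendered manifests act as the base we patch on top of.
resources:
  - loki.yaml
```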

Next, we need a patch that removes emptyDir from the PodSecurityPolicy. We do this by declaring a PodSecurityPolicy with the same name and namespace, but with just the volumes we want, in a new file called loki-patch.yaml.
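Here is a sketch of loki-patch.yaml. It assumes the PSP name, namespace and API version from the generated loki.yaml above. Because volumes is a plain list of strings, a strategic merge patch replaces it wholesale rather than merging it, and that is what drops emptyDir:

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  # Must match the generated PSP so Kustomize knows which resource to patch.
  name: loki
  namespace: monitoring
spec:
  # Replaces the chart's list; emptyDir is intentionally left out.
  volumes:
    - 'configMap'
    - 'persistentVolumeClaim'
    - 'secret'
```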

Now let’s add the patch to our kustomization.yaml file under patchesStrategicMerge and generate the YAML with kustomize build.

You can see that the PodSecurityPolicy in the newly generated YAML doesn’t have emptyDir anymore. We have fixed the problem!
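This sketch is the kustomization.yaml from the first step with the patch registered. After adding it, kustomize build . prints the patched manifests to stdout:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - loki.yaml
# Applied on top of the base; matched to the base resource by kind, name and namespace.
patchesStrategicMerge:
  - loki-patch.yaml
```

Newer Kustomize releases deprecate patchesStrategicMerge in favor of the patches field, which accepts the same file through a path entry.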

Kustomize Generators

Let’s take a look at the generated yaml again. The last thing I don’t like is the generated secret:

[web_code]
apiVersion: v1
kind: Secret
metadata:
  labels:
    app: loki
    chart: loki-0.28.0
    heritage: Helm
    release: loki
  name: loki
  namespace: monitoring
data:
  loki.yaml: YXV0aF9lbmFibGVkOiBmYWxzZQpjaHVua19zdG9yZV9jb25maWc6CiAgbWF4X2xvb2tfYmFja19wZXJpb2Q6IDAKaW5nZXN0ZXI6CiAgY2h1bmtfYmxvY2tfc2l6ZTogMjYyMTQ0CiAgY2h1bmtfaWRsZV9wZXJpb2Q6IDNtCiAgY2h1bmtfcmV0YWluX3BlcmlvZDogMW0KICBsaWZlY3ljbGVyOgogICAgcmluZzoKICAgICAga3ZzdG9yZToKICAgICAgICBzdG9yZTogaW5tZW1vcnkKICAgICAgcmVwbGljYXRpb25fZmFjdG9yOiAxCiAgbWF4X3RyYW5zZmVyX3JldHJpZXM6IDAKbGltaXRzX2NvbmZpZzoKICBlbmZvcmNlX21ldHJpY19uYW1lOiBmYWxzZQogIHJlamVjdF9vbGRfc2FtcGxlczogdHJ1ZQogIHJlamVjdF9vbGRfc2FtcGxlc19tYXhfYWdlOiAxNjhoCnNjaGVtYV9jb25maWc6CiAgY29uZmlnczoKICAtIGZyb206ICIyMDE4LTA0LTE1IgogICAgaW5kZXg6CiAgICAgIHBlcmlvZDogMTY4aAogICAgICBwcmVmaXg6IGluZGV4XwogICAgb2JqZWN0X3N0b3JlOiBmaWxlc3lzdGVtCiAgICBzY2hlbWE6IHY5CiAgICBzdG9yZTogYm9sdGRiCnNlcnZlcjoKICBodHRwX2xpc3Rlbl9wb3J0OiAzMTAwCnN0b3JhZ2VfY29uZmlnOgogIGJvbHRkYjoKICAgIGRpcmVjdG9yeTogL2RhdGEvbG9raS9pbmRleAogIGZpbGVzeXN0ZW06CiAgICBkaXJlY3Rvcnk6IC9kYXRhL2xva2kvY2h1bmtzCnRhYmxlX21hbmFnZXI6CiAgcmV0ZW50aW9uX2RlbGV0ZXNfZW5hYmxlZDogZmFsc2UKICByZXRlbnRpb25fcGVyaW9kOiAwcw==
[/web_code]
If you base64-decode this, you will see:

[web_code]
auth_enabled: false
chunk_store_config:
  max_look_back_period: 0
ingester:
  chunk_block_size: 262144
  chunk_idle_period: 3m
  chunk_retain_period: 1m
  lifecycler:
    ring:
      kvstore:
        store: inmemory
      replication_factor: 1
  max_transfer_retries: 0
limits_config:
  enforce_metric_name: false
  reject_old_samples: true
  reject_old_samples_max_age: 168h
schema_config:
  configs:
  - from: "2018-04-15"
    index:
      period: 168h
      prefix: index_
    object_store: filesystem
    schema: v9
    store: boltdb
server:
  http_listen_port: 3100
storage_config:
  boltdb:
    directory: /data/loki/index
  filesystem:
    directory: /data/loki/chunks
table_manager:
  retention_deletes_enabled: false
  retention_period: 0s
[/web_code]
The decoded secret contains the configuration for Loki. Let’s say we want to change the retention period. One option would be to change it through the chart’s values.yaml, but I would recommend against it, because Kustomize has an awesome feature called secretGenerator / configMapGenerator. It lets you avoid YAML embedded in YAML, and any change to the secret triggers an actual rollout in your Kubernetes cluster.

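In order to use secretGenerator, we copy the base64-decoded secret into a file. I’ve named my file loki-conf.yaml. Then I changed retention_period from 0s to 168h and set retention_deletes_enabled to true. Loki expects the retention period to be a multiple of the 168h index period, and the table manager deletes nothing while deletes are disabled. Everything else stays identical to the decoded configuration above.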

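Now let’s add it to kustomization.yaml with a secretGenerator whose behavior is replace, so it replaces the chart’s loki secret instead of creating a second one.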
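Here is a sketch of the kustomization.yaml at this stage. It mirrors the secretGenerator entry in the full kustomization.yaml in the GitOps section below. The loki.yaml= prefix keeps the key name loki.yaml from the chart’s secret, even though the local file is called loki-conf.yaml:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - loki.yaml
patchesStrategicMerge:
  - loki-patch.yaml
secretGenerator:
  - name: loki
    namespace: monitoring
    # Replace the Helm-generated secret instead of adding a new one.
    behavior: replace
    files:
      # key=path: store the content of loki-conf.yaml under the key loki.yaml
      - loki.yaml=loki-conf.yaml
```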

kustomize build will now generate a new secret named loki-<hash>, where the hash is computed from the content of loki-conf.yaml. All references to the secret named loki are replaced with the new name. When you change the configuration, Kustomize generates a new hash, which triggers a rollout in Kubernetes. You can also safely roll back to the previous configuration, as the old secret is still in the cluster unless something prunes it.
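Below is an illustrative excerpt of the kustomize build output after this change. <hash> is a placeholder for the generated suffix, not a real value, and only the relevant part of the StatefulSet is shown:

```yaml
apiVersion: v1
kind: Secret
metadata:
  # The suffix changes whenever loki-conf.yaml changes.
  name: loki-<hash>
  namespace: monitoring
---
# Excerpt from the Loki StatefulSet pod template: the reference is rewritten too.
volumes:
  - name: config
    secret:
      secretName: loki-<hash>
```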

Kustomize build

Kustomize brings a lot of benefits to the table. For example, you can treat it as a sort of compiler that makes sure your manifests actually build and your custom patches apply.

Let’s say upstream renames the secret from loki to loki-v2, but our custom patch still references the old name. If I run kustomize build, it fails:

[web_code]
Error: merging from generator &{0xc0004e5170 { } {map[] map[] false} {{monitoring loki merge {[] [loki.yaml=loki-conf.yaml] []}} }}: id resid.ResId{Gvk:resid.Gvk{Group:"", Version:"v1", Kind:"Secret"}, Name:"loki", Namespace:"monitoring"} does not exist; cannot merge or replace
[/web_code]
This way Kustomize protects you by failing the build. The message is quite clear: you can’t apply your patch, because a secret named loki doesn’t exist. In Helm’s case, if the chart author renames a configuration option, your old setting is silently ignored.

For example, let’s mistype the config option persistence.enabled:

[web_code]
helm template loki --namespace=monitoring --set "persistence.enabled-v2=true,persistence.storageClassName=local-storage" loki/loki > loki.yaml
[/web_code]
This generates the emptyDir version of Loki without any errors.

GitOps

Kustomize can also combine different YAML files into a single large one. I typically recommend having one kustomization.yaml per namespace, so that kustomize build creates the whole namespace with all of its applications & resources. For example:

[web_code]
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: monitoring
secretGenerator:
- files:
  - loki.yaml=loki-conf.yaml
  name: loki
  behavior: replace
  namespace: monitoring
patchesStrategicMerge:
- loki-patch.yaml
resources:
- namespace.yaml
- loki.yaml
- promtail.yaml
- prometheus-pv.yaml
- prometheus.yaml
- prometheus-rbac.yaml
- blackbox-exporter.yaml
[/web_code]
This way kustomize build combines the Namespace definition, Prometheus, Loki, Promtail & blackbox-exporter into one output.
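The namespace.yaml listed first in resources can be as small as the following sketch. It assumes the file only declares the monitoring namespace that the other resources use:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: monitoring
```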

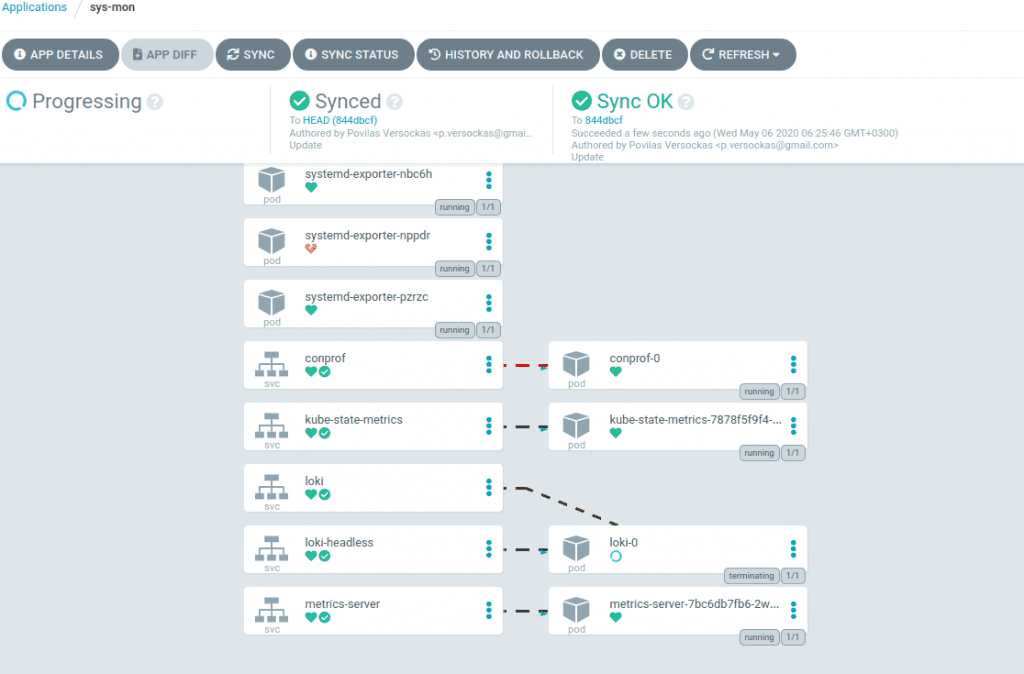

If you commit everything to git, you can use the GitOps deployment model with Argo CD or Weave Flux.

Personally, I’m a big fan of Argo CD: it has a beautiful web UI and good support for Kustomize.

To get started, add the templated Helm manifests to git, then add your kustomization.yaml with all the custom patches. Now you can fire up the Argo CD UI and add a new application that points to your git repository. That’s it.

Argo CD lets you choose between synchronization strategies. When starting out, I recommend manual sync, which requires you to click a button to synchronize the changes to the cluster. Later, when you feel safe with Argo CD, you can enable automatic synchronization. Argo CD will then keep pulling the repository and doing the equivalent of kustomize build | kubectl apply -f - against your Kubernetes cluster.
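Instead of clicking through the UI, you can declare the application as an Argo CD Application manifest. This sketch assumes Argo CD is installed in the argocd namespace, uses a hypothetical repository URL, and assumes a monitoring directory that holds the kustomization.yaml. Leaving out syncPolicy keeps the manual sync recommended above, and the commented block switches to automatic sync:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: monitoring
  # Assumes Argo CD runs in the argocd namespace.
  namespace: argocd
spec:
  project: default
  source:
    # Hypothetical repository; point this at your own git repo.
    repoURL: https://github.com/example/k8s-manifests.git
    targetRevision: HEAD
    # Directory containing kustomization.yaml; Argo CD detects Kustomize from it.
    path: monitoring
  destination:
    server: https://kubernetes.default.svc
    namespace: monitoring
  # Uncomment to enable automatic sync. prune is left off (its default),
  # so superseded loki-<hash> secrets are kept for rollbacks.
  # syncPolicy:
  #   automated:
  #     selfHeal: true
```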

Issues

One thing to be careful about is Secrets. You typically don’t want to commit plaintext or base64-encoded passwords to git. Some Helm charts let you skip secret creation: for example, the Postgres chart has a postgresql.createSecret option and the MySQL chart has an existingSecret value. In Loki’s case we are out of luck. There is no option to skip the secret, and Kustomize won’t help either, as it doesn’t support removing resources. You can read more about it in this design doc. For now, with such charts I manually remove the unneeded secrets and repeat that on every update.

Do you know a tool that solves this? Please let me know.

Conclusion

Overall, this works great. Helm handles most of the job, and when it falls short, Kustomize helps. I highly recommend this approach to managing Kubernetes manifests, as it gives you room to review the configuration and make it production-ready. It’s certainly better than YOLO-running helm install and seeing what happens. If you do that, please try it in minikube first.

Have some free time? Consider signing up for the Certified Kubernetes Application Developer exam. You will learn a lot of valuable Kubernetes skills.

Thanks for reading. Hope you enjoyed the article. See you next time!